Jinshan Pan Jiangxin Dong Yang Liu Jiawei Zhang Jimmy Ren Jinhui Tang Yu-Wing Tai Ming-Hsuan Yang

Abstract

We present an algorithm to directly solve numerous image restoration problems (e.g., image deblurring, image dehazing, and image deraining). These problems are highly ill-posed, and existing methods usually rely on assumptions derived from heuristic image priors. In this paper, we find that these problems can be solved by generative models with adversarial learning. However, the basic formulation of generative adversarial networks (GANs) does not generate realistic images, and some structures of the estimated images are usually not preserved well. Motivated by the observation that the estimated results should be consistent with the observed inputs under the physics models, we propose a physics model constrained learning algorithm that guides the estimation of the specific task within the conventional GAN framework. The proposed algorithm is trained in an end-to-end fashion and can be applied to a variety of image restoration and related low-level vision problems. Extensive experiments demonstrate that our method performs favorably against state-of-the-art algorithms.
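The consistency idea in the abstract can be illustrated with a small sketch. It is not the authors' implementation: the page does not state which physics models or loss terms are used, so the sketch assumes the standard haze formation model I = J·t + A·(1 − t) and an L1 penalty. The function names, the toy pixel values, and the use of flat pixel lists are all hypothetical. The restored estimate is re-degraded with the physics model and compared against the observed input; in a GAN setting, a term of this kind would presumably be added to the adversarial loss with some weight.

```python
from typing import List


def apply_haze_model(clear: List[float], transmission: List[float],
                     airlight: float) -> List[float]:
    """Re-synthesize a hazy signal per pixel: I = J * t + A * (1 - t).

    Assumption: the standard atmospheric scattering model; the source page
    does not spell out the physics model it uses.
    """
    return [j * t + airlight * (1.0 - t) for j, t in zip(clear, transmission)]


def physics_consistency_loss(observed: List[float], restored: List[float],
                             transmission: List[float],
                             airlight: float) -> float:
    """Mean absolute difference between the observed input and the
    restored estimate after it is passed back through the haze model."""
    resynthesized = apply_haze_model(restored, transmission, airlight)
    return sum(abs(o - r) for o, r in zip(observed, resynthesized)) / len(observed)


# Toy data (hypothetical values): three pixels of a clear image,
# a transmission map, and a global airlight.
clear = [0.2, 0.5, 0.8]
transmission = [0.9, 0.6, 0.3]
airlight = 1.0
observed = apply_haze_model(clear, transmission, airlight)

# A correct restoration reproduces the observation under the model,
# while a poor one (all-black estimate) does not.
loss_good = physics_consistency_loss(observed, clear, transmission, airlight)
loss_bad = physics_consistency_loss(observed, [0.0, 0.0, 0.0], transmission, airlight)
print(loss_good, loss_bad)
```

The loss is zero only when the estimate explains the observed input under the assumed model, which is the kind of constraint an adversarial loss alone does not enforce.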

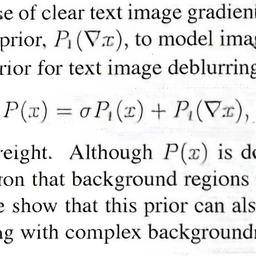

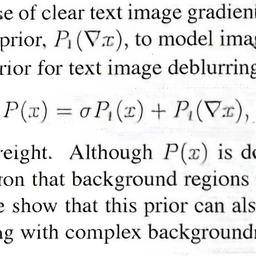

Image Deblurring

| Blurred image | Hradiš et al. | Ours |

| Blurred image | DeblurGAN | Ours |

| Blurred image | Pan et al. | Ours |

Image Dehazing

| Hazy image | GAN dehazing | Ours |

Image Deraining

| Rainy image | GAN deraining | Ours |

Proposed Algorithm

Visual Comparisons

Image Deblurring

| Blurred image | Xu et al. | Pan et al. (TPAMI 2017) | Pan et al. (ECCV 2014) |

| Zhang et al. | DeblurGAN | PCycleGAN | Ours |

Image Dehazing

| Hazy image | He et al. | Meng et al. | Berman et al. |

| Chen et al. | Cai et al. | Ren et al. | Ours |

Image Deraining

| Rainy image | Li et al. | Zhang et al. | Fu et al. |

| Yang et al. | pix2pix | CycleGAN | Ours |

Technical Papers, Codes, and Datasets

References

[1] I. J. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. C. Courville, and Y. Bengio, “Generative adversarial nets,” in NIPS, 2014, pp. 2672–2680.

[2] P. Isola, J.-Y. Zhu, T. Zhou, and A. A. Efros, “Image-to-image translation with conditional adversarial networks,” in CVPR, 2017, pp. 1125–1134.

[3] M. Hradiš, J. Kotera, P. Zemčík, and F. Šroubek, “Convolutional neural networks for direct text deblurring,” in BMVC, 2015, pp. 6.1–6.13.

[4] S. Nah, T. H. Kim, and K. M. Lee, “Deep multi-scale convolutional neural network for dynamic scene deblurring,” in CVPR, 2017, pp. 3883–3891.

[5] L. Xu, S. Zheng, and J. Jia, “Unnatural L0 sparse representation for natural image deblurring,” in CVPR, 2013, pp. 1107–1114.

[6] J. Pan, D. Sun, H. Pfister, and M.-H. Yang, “Blind image deblurring using dark channel prior,” in CVPR, 2016, pp. 1628–1636.

[7] O. Kupyn, V. Budzan, M. Mykhailych, D. Mishkin, and J. Matas, “DeblurGAN: Blind motion deblurring using conditional adversarial networks,” in CVPR, 2018, pp. 8183–8192.

[8] O. Whyte, J. Sivic, A. Zisserman, and J. Ponce, “Non-uniform deblurring for shaken images,” International Journal of Computer Vision, vol. 98, no. 2, pp. 168–186, 2012.

[9] L. Xu, J. S. J. Ren, Q. Yan, R. Liao, and J. Jia, “Deep edge-aware filters,” in ICML, 2015, pp. 1669–1678.

[10] J. Pan, Z. Hu, Z. Su, and M.-H. Yang, “L0-regularized intensity and gradient prior for deblurring text images and beyond,” IEEE TPAMI, vol. 39, no. 2, pp. 342–355, 2017.

[11] J.-Y. Zhu, T. Park, P. Isola, and A. A. Efros, “Unpaired image-to-image translation using cycle-consistent adversarial networks,” in ICCV, 2017, pp. 2223–2232.

[12] S. Cho and S. Lee, “Fast motion deblurring,” in SIGGRAPH Asia, vol. 28, no. 5, 2009, p. 145.

[13] C. Dong, C. C. Loy, K. He, and X. Tang, “Learning a deep convolutional network for image super-resolution,” in ECCV, 2014, pp. 184–199.

[14] J. Zhang, J. Pan, J. Ren, Y. Song, L. Bao, R. W. Lau, and M.-H. Yang, “Dynamic scene deblurring using spatially variant recurrent neural networks,” in CVPR, 2018, pp. 2521–2529.

[15] C. Ledig, L. Theis, F. Huszár, J. Caballero, A. Cunningham, A. Acosta, A. Aitken, A. Tejani, J. Totz, Z. Wang, and W. Shi, “Photo-realistic single image super-resolution using a generative adversarial network,” in CVPR, 2017, pp. 4681–4690.

[16] K. He, J. Sun, and X. Tang, “Single image haze removal using dark channel prior,” in CVPR, 2009, pp. 1956–1963.

[17] D. Berman, T. Treibitz, and S. Avidan, “Non-local image dehazing,” in CVPR, 2016, pp. 1674–1682.

[18] C. Chen, M. N. Do, and J. Wang, “Robust image and video dehazing with visual artifact suppression via gradient residual minimization,” in ECCV, 2016, pp. 576–591.

[19] W. Ren, S. Liu, H. Zhang, J. Pan, X. Cao, and M.-H. Yang, “Single image dehazing via multi-scale convolutional neural networks,” in ECCV, 2016, pp. 154–169.

[20] B. Cai, X. Xu, K. Jia, C. Qing, and D. Tao, “DehazeNet: An end-to-end system for single image haze removal,” IEEE TIP, vol. 25, no. 11, pp. 5187–5198, 2016.

[21] G. Meng, Y. Wang, J. Duan, S. Xiang, and C. Pan, “Efficient image dehazing with boundary constraint and contextual regularization,” in ICCV, 2013, pp. 617–624.